Last week I was thinking about the 'Smart City' and how emerging technologies each seem to have a designated place in it, waiting for the moment they reach adequate efficiency and scale. I've been reading *Smart Cities* by Anthony Townsend and learning about the history of tech giants such as IBM, Cisco and Siemens. The book discusses the technologies they invented, how those technologies shaped cities, and how these companies would like to shape the cities of the future. It's a visionary, and sometimes scary, look into the future.

As these tech giants grow and aim to hyper-connect our world, Townsend also recalls the relationship between Ford and cities, and how interstates were built over neighborhoods to connect the car to the city. Today's companies want to connect multiple technologies to the city, and although most of them offer amazing benefits, we must not forget that we build cities for humans. Humans are the reason cities and their systems exist, and humans are also the ones who keep these systems running.

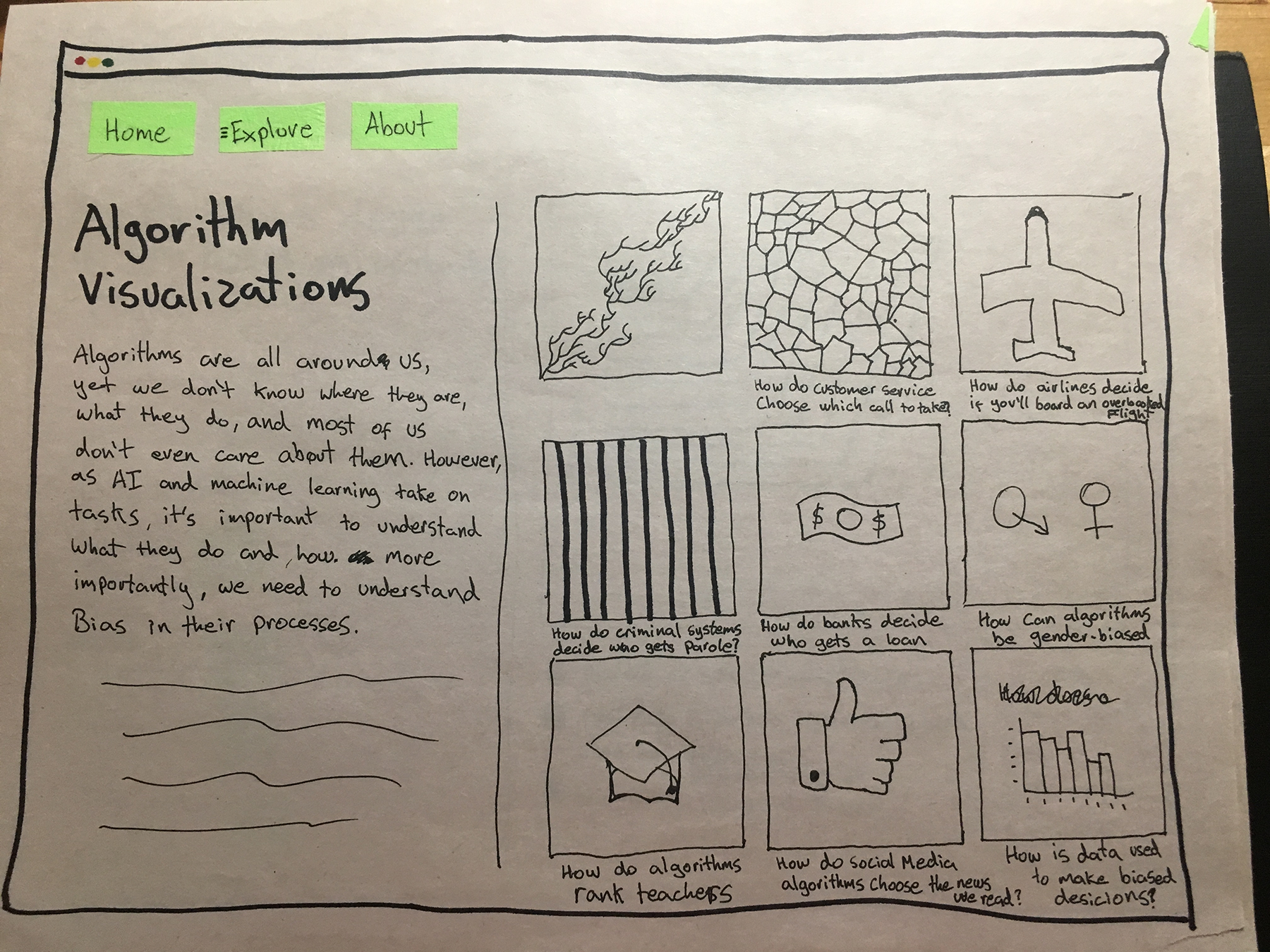

Deciding if and where these technologies should exist in the city means we first need to understand what they do and how they do it. This need for understanding has led us to push for more transparency about how software works (open source software) and how data is used (open data). These movements have existed for a while, and their work has led to a more open discussion about how governments and companies should use software and data. With the development of machine learning and its use in many new areas of society, I believe it's time we have a more open discussion about the algorithms that work behind the scenes.

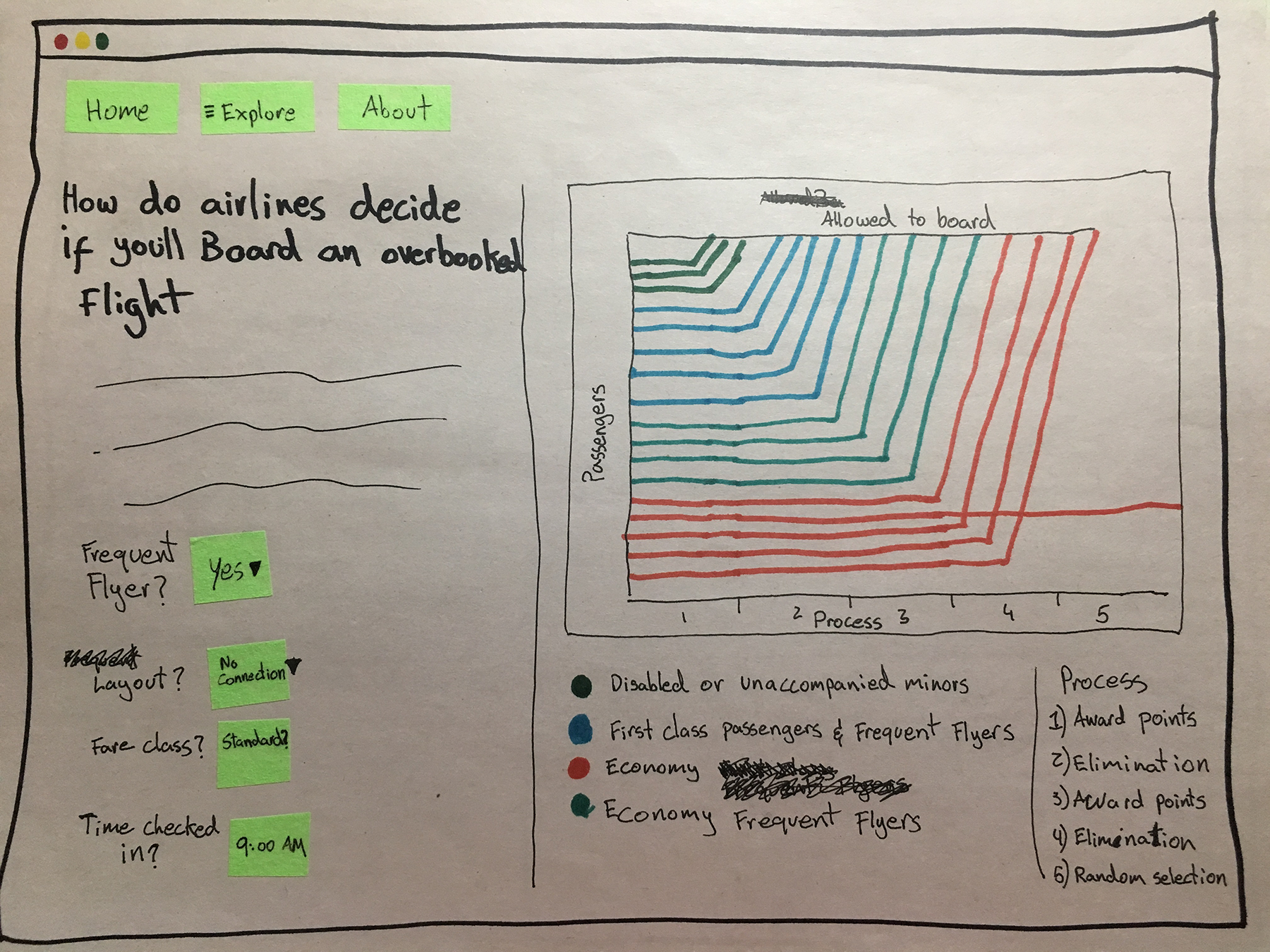

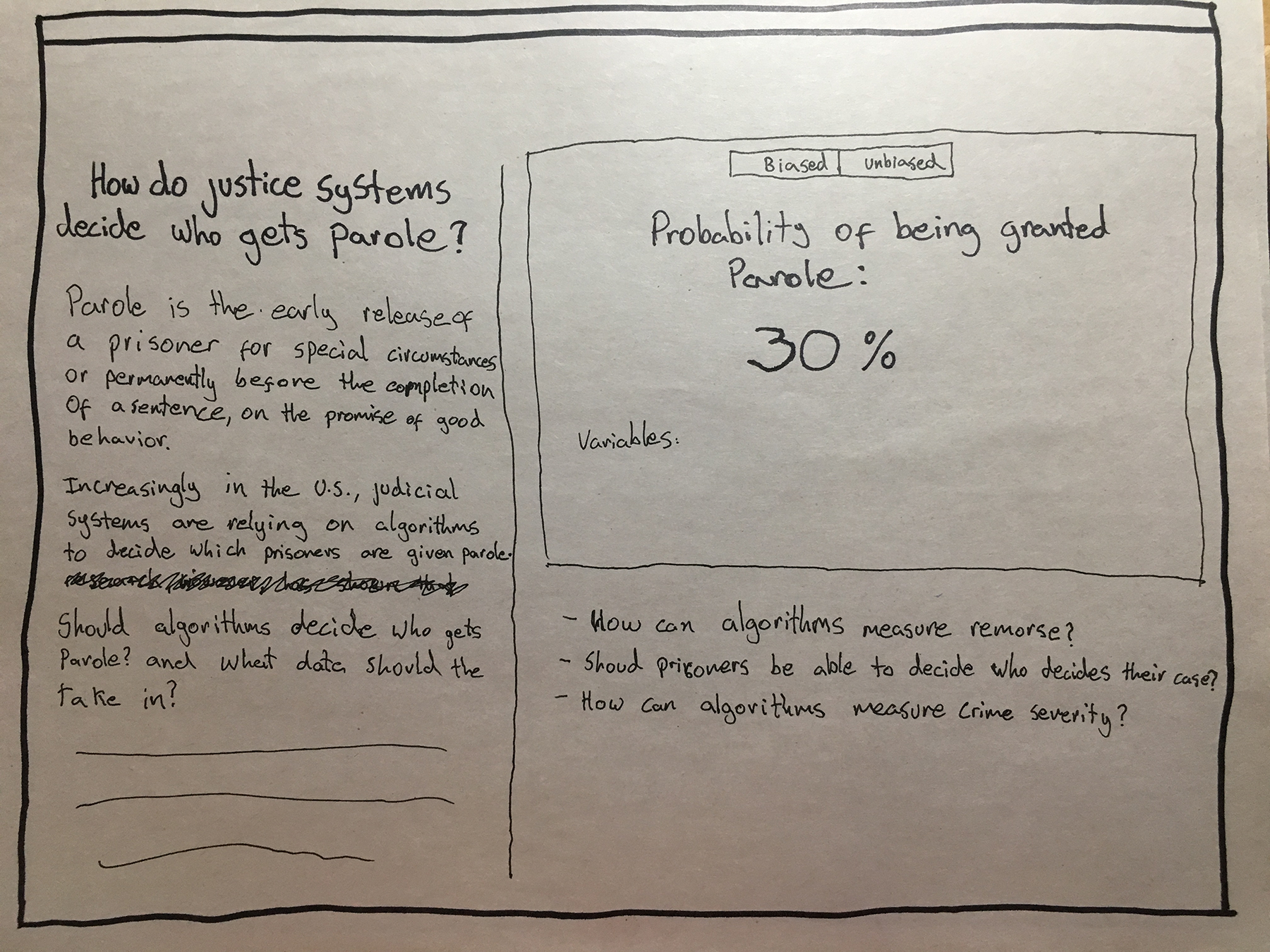

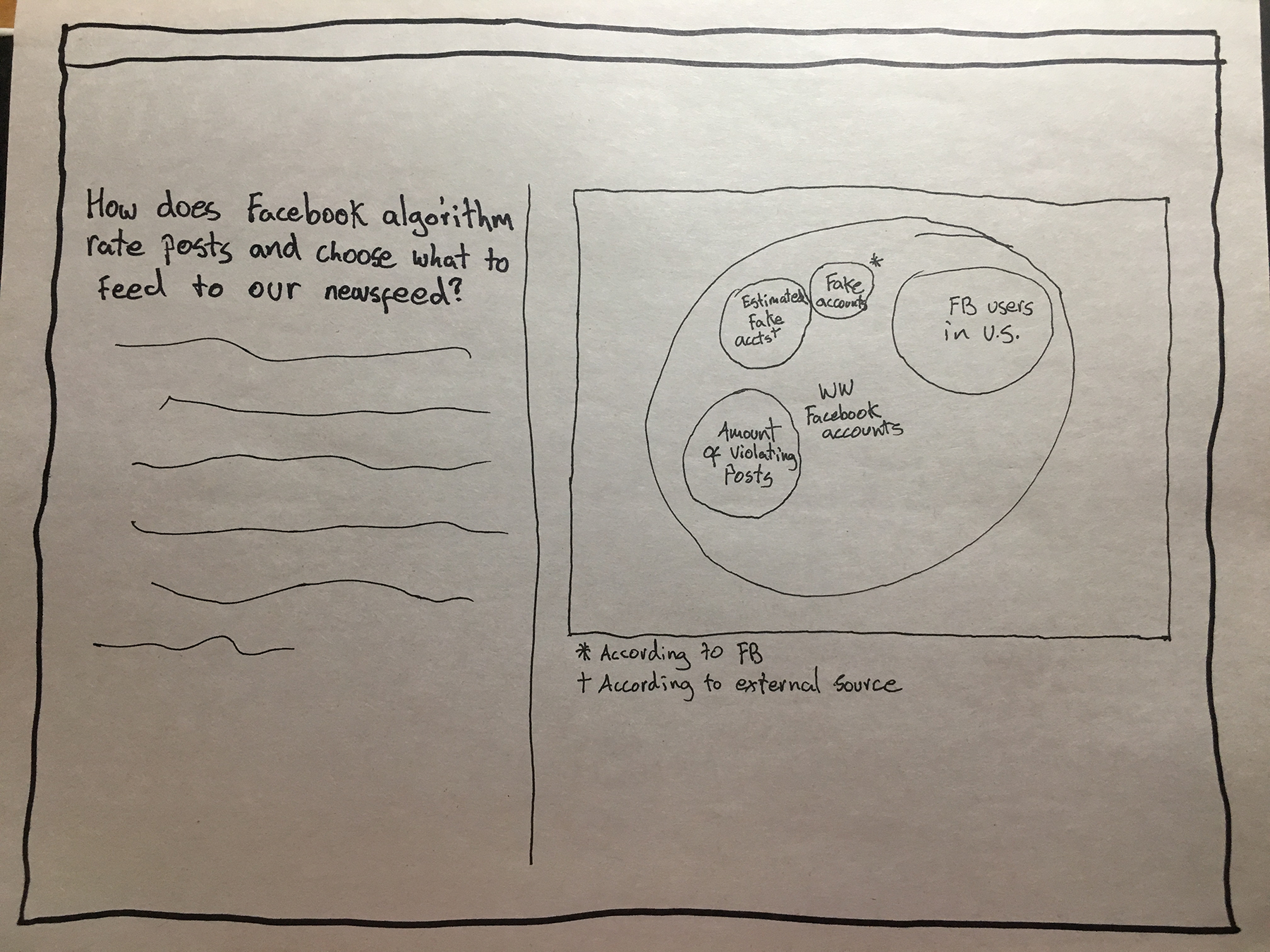

As Technology Review points out in "Biased Algorithms Are Everywhere, and No One Seems to Care" (Technology Review, 2017), it's important that we care about these algorithms, because they decide many important things in our lives, such as who gets a loan, who gets parole and who is bumped from an overbooked flight. Furthermore, this month, September 2018, representatives from Facebook, Twitter and Google will talk to Congress about "the role of the world’s largest technology platforms in the spread of political propaganda, extremism and disinformation" (Financial Times, 2018), among other things. The Financial Times also points out that one of the key issues Congress should discuss with these companies is their use of algorithms and the level of transparency these formulas should have.

> The time has come for Silicon Valley to open the black box and reveal how the algorithms work. Trust in democracy, and capitalism, depends on it. (Financial Times, 2018)

I am neither a mathematician nor a physicist, so I don't fully understand algorithms either. My question this week is therefore: "How can we visualize the algorithms that exist in our daily lives in order to understand them better?"

I find this question extremely interesting, not only because of what it represents for our relationship as humans with this technology, but also because of the aesthetic beauty of visualizing algorithms. Mike Bostock gave an amazing talk on visualizing algorithms at Eyeo 2014. All of these sources led me to create this prototype, which explores how the algorithms in our daily lives might be visualized in a web environment.